Agents in Generative AI

A more personalized student-centered experience at scale.

The path to access and success is paved with innovative, interdisciplinary efforts that democratize education, ensuring every student benefits from personalized learning experiences.

Agents

Agents in generative AI are semi-autonomous entities that collaborate and interact dynamically, allowing them to solve complex problems and combine specialized capabilities for greater efficiency and adaptability.

More practically, an agent can be thought of as a GPT designed for a specific, narrow task.

An Agentic Framework is a workflow system that allows these Agents to work together through clearly defined communication protocols and structured workflows such as approvals, loops, and external integrations.

General Characteristics of an Agent (like a GPT):

- Specialization: Each agent has a specific role or expertise, such as generating content, analyzing data, or orchestrating tasks.

- Collaboration: Agents communicate and work together via an Agentic Framework such as AutoGen.

- Reusability: Agents can function independently or as part of a larger agent team.
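The characteristics above can be sketched in a few lines of plain Python. This is a minimal illustration, not AutoGen's API or the CreateAI implementation: the `Agent` class, the `run_with_approval` helper, and the toy `write` and `review` functions are all hypothetical names introduced here, and the task functions stand in for real model calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """A narrowly scoped worker: one name, one role, one task function."""
    name: str
    role: str
    handle: Callable[[str], str]  # placeholder for a real model call

    def run(self, message: str) -> str:
        return self.handle(message)


def run_with_approval(worker: Agent, reviewer: Agent, task: str, max_rounds: int = 3) -> str:
    """Worker drafts; reviewer approves or sends feedback; loop up to max_rounds."""
    draft = worker.run(task)
    for _ in range(max_rounds):
        verdict = reviewer.run(draft)
        if verdict == "APPROVED":
            break
        draft = worker.run(f"{task}\nRevise per feedback: {verdict}")
    return draft


def write(message: str) -> str:
    # Toy behavior: echo the task line, and mark the draft once feedback arrives.
    suffix = " (revised)" if "Revise per feedback" in message else ""
    return f"Draft for: {message.splitlines()[0]}{suffix}"


def review(draft: str) -> str:
    # Toy behavior: approve only drafts that have been revised at least once.
    return "APPROVED" if draft.endswith("(revised)") else "add more detail"


writer = Agent("writer", "content generation", write)
reviewer = Agent("reviewer", "approval", review)
result = run_with_approval(writer, reviewer, "Course overview")
```

Each agent keeps a single responsibility (specialization), the helper passes messages between them in an approval loop (collaboration), and `writer.run(...)` still works on its own outside the loop (reusability).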

Imagine a Course Builder AI Using this Agentic Framework

Now that we’ve explored the foundational concepts of agents, let's see how they come together in a real-world application with various roles. Imagine a Course Builder AI—a system designed to streamline and automate the course creation process. By leveraging specialized agents, this AI can efficiently draft course structures, generate learning materials, and manage approvals, all while ensuring alignment with institutional standards.

Here’s how these agents could contribute to the workflow:

- Facilitator: Oversees other agents, maps out all the steps for drafting an ASU course, and manages the approval workflow for course creation.

- Outline & Learning Objectives Creator: Reviews course content, suggests modules, outlines lecture topics, creates learning objectives, proposes discussion points, and designs assessment/grading elements.

- Syllabus Creator: Creates a syllabus for the course given the outline.

- Module Creator: Drafts a module based on the course content.

- Lecture Scripter: Develops lecture scripts and presentation flows.

- Assessment Creator: Designs specific assessments for each module based on its content.

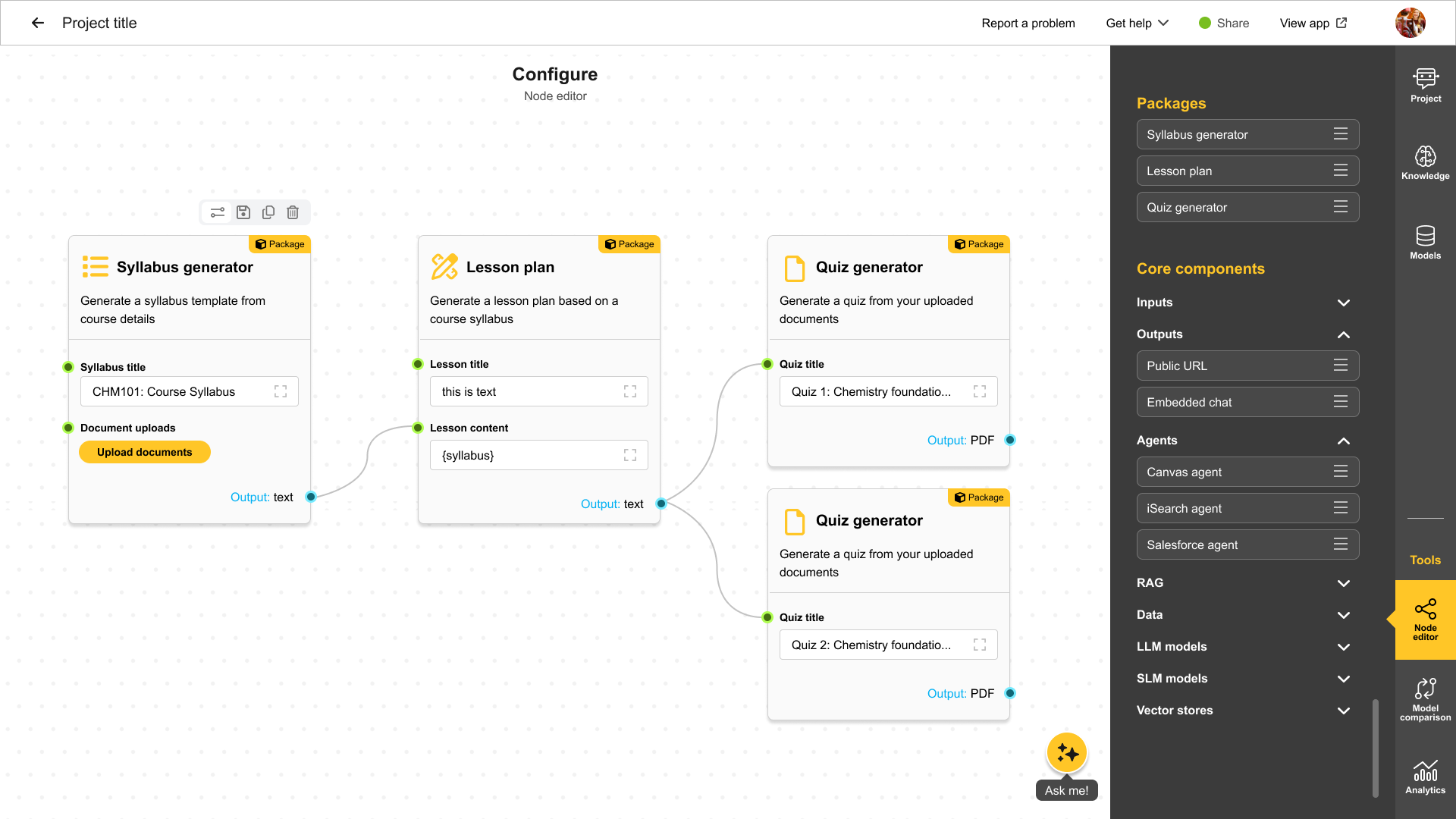
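One way to picture the roles above is as a small pipeline in which the Facilitator calls each specialist in turn and gates the rest of the work on an approval step. The sketch below is purely illustrative: every function name, the `approve` callback, the two-module outline, and the returned dictionary shape are assumptions made for this example, and each stub stands in for a model-backed agent.

```python
from typing import Callable, Dict, List


# Each stub is a hypothetical stand-in for a model-backed agent.
def outline_creator(course: str) -> List[str]:
    return [f"{course}: Module {i}" for i in (1, 2)]


def syllabus_creator(course: str, outline: List[str]) -> str:
    return f"Syllabus for {course} covering {len(outline)} modules"


def module_creator(title: str) -> str:
    return f"Module draft: {title}"


def lecture_scripter(title: str) -> str:
    return f"Lecture script: {title}"


def assessment_creator(title: str) -> str:
    return f"Assessment: {title}"


def facilitator(course: str, approve: Callable[[str], bool]) -> Dict[str, object]:
    """Map out the steps, call each specialist, and gate on approval."""
    outline = outline_creator(course)
    syllabus = syllabus_creator(course, outline)

    # Approval step: stop early if the syllabus is not signed off.
    if not approve(syllabus):
        return {"status": "needs_revision", "syllabus": syllabus}

    modules = [
        {
            "module": module_creator(title),
            "lecture": lecture_scripter(title),
            "assessment": assessment_creator(title),
        }
        for title in outline
    ]
    return {"status": "approved", "syllabus": syllabus, "modules": modules}


# Usage: a reviewer who approves everything, then one who rejects.
approved = facilitator("Sample Course", approve=lambda syllabus: True)
rejected = facilitator("Sample Course", approve=lambda syllabus: False)
```

In a real system the `approve` callback would typically be a human reviewer or a dedicated review agent rather than a lambda.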

The Future of CreateAI and MyAI Builder

The future of AI-driven workflows moves beyond traditional interfaces, enabling more dynamic and integrated experiences. By leveraging advanced models, retrieving relevant data, and incorporating enterprise tools, this approach enhances automation, adaptability, and user interaction across multiple platforms. Below are key elements shaping this evolution:

- Flow Builder Instead of a Form-Based UI

- Stitch together multiple LLMs

- Fetch data from multiple RAG indexes

- Mix and match multimodal models

- Create & Integrate Agents into your flow

- Enterprise tools that let agents integrate enterprise data sources (Canvas, iSearch, Salesforce, Data Warehouse, etc.)

- Custom tools enabling integration of any external source

- Multiple experience options (Chat, Canvas, Webpage, Push, SMS, Slack, etc.)

- Context enrichment from PeopleSoft, Canvas, Salesforce, and the Data Warehouse

- Small Language Models
