Breakdown of RAG Model Parameters, Settings and Their Impact

The core architecture of RAG consists of two main components:

- Retriever: This component searches for and extracts relevant data from a large knowledge corpus based on a given query. It leverages algorithms like BM25 or dense vector search (using embeddings generated by models such as BERT) to identify the most contextually relevant content.

- Generator: Once relevant data is retrieved, the generator synthesizes a coherent and contextually appropriate response by incorporating the information into its output. Popular models for this component include GPT-based architectures, which are fine-tuned to produce responses that sound natural and informative.

The primary benefit of RAG is that it extends traditional generative models, letting them access and use updated or domain-specific knowledge that was not part of their training data. This makes RAG particularly useful for applications such as chatbots and information retrieval systems, where users need specific, current, or highly contextual information.
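The retriever and generator split described above can be illustrated with a minimal sketch. It is not how any particular platform implements RAG: the corpus, the function names, and the simple term-overlap score (a stand-in for BM25 or dense vector search) are all assumptions made for illustration, and the generator step is represented only by the prompt that would be sent to a language model.

```python
import re
from collections import Counter

# Hypothetical toy corpus; a real knowledge base would hold many more documents.
CORPUS = [
    "The library is open from 8am to 10pm on weekdays.",
    "Parking permits can be renewed online each semester.",
    "The library offers quiet study rooms that can be reserved online.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def overlap_score(query, document):
    """Toy relevance score: occurrences of distinct query tokens in the document.
    This is an illustrative stand-in for BM25 or embedding similarity."""
    doc_counts = Counter(tokenize(document))
    return sum(doc_counts[token] for token in set(tokenize(query)))

def retrieve(query, corpus, top_k=2):
    """Retriever: return up to top_k documents with a non-zero score, best first."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return [doc for doc in ranked[:top_k] if overlap_score(query, doc) > 0]

def build_prompt(query, passages):
    """Generator input: combine retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

question = "When is the library open?"
passages = retrieve(question, CORPUS, top_k=2)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real deployment the prompt would be passed to the configured generative model, and the retriever would query an indexed knowledge base rather than an in-memory list.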

| Parameter Name | Reference Image | Function | How It Affects the Chatbot | What It Means to the RAG Model | Optimum Level/Setting |

|---|---|---|---|---|---|

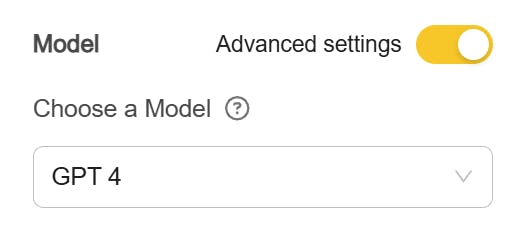

Model |  | The specific generative language model used in the RAG framework (e.g., GPT-4, Claude AI). | Determines the quality, coherence, and fluency of chatbot responses. | Acts as the generative component that synthesizes answers using retrieved data. | Use the latest robust versions (e.g: GPT-4o, latest Claude Model) for high-quality responses. |

Custom Instructions / System Prompt |  | Guides the model’s behavior by setting initial conditions or constraints. | Enhances relevance, consistency, and alignment of chatbot responses with desired communication style. | Aligns the generation phase with specific user/system needs for tailored outputs. | Detailed and clear instructions, concise yet comprehensive, without excessive detail. |

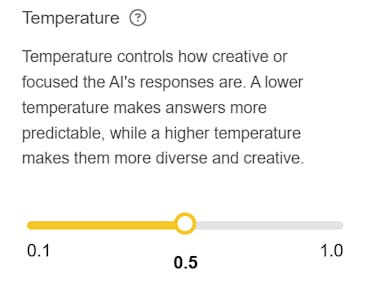

Temperature |  | Controls randomness or creativity of the model's output. | Influences response variability and creativity; lower settings result in more predictable outputs. | Adjusts how confidently the model selects from its word distribution during generation. | 0.2 - 0.5 for precise outputs; 0.7 or above for more creative responses. |

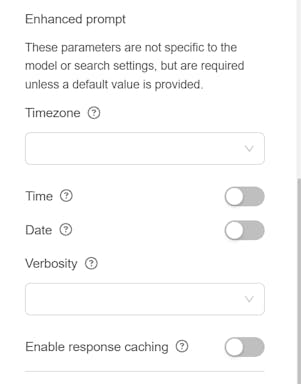

Timezone |  | Sets the time zone context for responses and data retrieval. | Ensures time-specific queries are accurately interpreted and responded to based on the user's local time. | Helps the model align retrieved information with the correct temporal context, enhancing response accuracy for time-based queries. | Set to the user's local timezone or where the primary audience is located. |

Time |  | Indicates whether the current time should be factored into responses. | Allows the chatbot to provide time-relevant responses, such as deadlines or schedule-based answers. | Enables the RAG model to contextualize retrieved information with time-specific content. | Toggle on when the chatbot needs to handle time-based queries |

Date |  | Specifies whether the current date should be included in responses. | Allows the chatbot to reference dates accurately for tasks involving scheduling, deadlines, or event timelines. | Helps align retrieval and generation with date-specific data for more contextual output. | Toggle on for date-dependent tasks or information requests. |

Verbosity |  | Controls the length and detail of the responses generated by the model. | Influences whether the chatbot gives brief, concise responses or detailed, comprehensive answers. | Affects the output granularity during the response generation phase, determining how detailed the retrieved and synthesized content should be. | Adjust based on the task requirements: concise for quick answers, more detailed for in-depth explanations. |

Enable Response Caching |  | Allows the caching of generated responses for reuse, optimizing performance. | Speeds up response time for repeated or similar queries and reduces computational load. | Improves efficiency by reusing previously generated outputs when relevant, reducing redundant data retrieval and synthesis. | Enable for scenarios with repetitive queries to improve speed and reduce resource usage. |

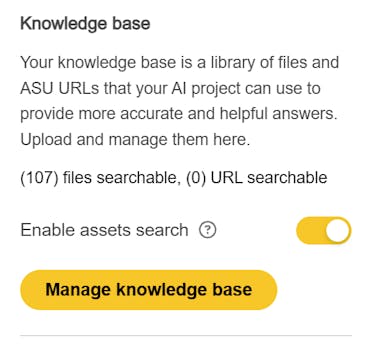

Knowledge Base |  | The database or repository from which the RAG model retrieves relevant documents and information. | Directly affects the quality and relevance of the chatbot’s responses, as the retrieved data comes from this source. | The knowledge base is a core part of the retrieval component in RAG, allowing the model to augment generative outputs with real-time, context-specific data. | Ensure the knowledge base is kept up-to-date and contains all necessary and reliable documents to support user queries. |

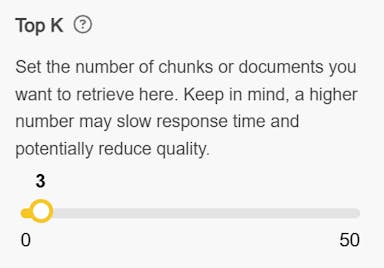

Top K |  | Sets the number of chunks or documents to retrieve in response to a query. | Affects response quality and performance; retrieving more chunks provides comprehensive answers but may slow down response time and reduce precision. | Controls the scope of the retrieval phase by defining how many data pieces are considered, which impacts the completeness and depth of generated outputs. | Typically set between 3 and 5 for a balance between quality and performance; higher values can be tested based on data needs. |

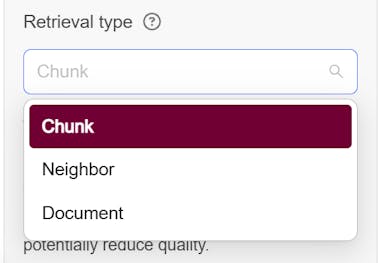

Retrieval Type |  | Specifies how the model retrieves data from the knowledge base (e.g., chunk-based retrieval). | Determines the granularity and structure of the retrieved data, impacting how the chatbot forms its responses. | Influences how the model segments and pulls relevant pieces of information to combine during the generation phase. | Set to "Chunk" for detailed, context-specific answers, or other types based on specific use cases. |

Enable Assets Search | Allows the RAG model to include searches for specific assets or media types (e.g., images, PDFs, or other documents) during the retrieval phase. | Enhances the chatbot’s ability to provide more detailed and relevant responses by including references or visual content. | Expands the retrieval capabilities of the RAG model, ensuring that not only textual data but also multimedia and documents are considered in responses. | Enable when a wider range of asset types is needed for comprehensive responses; disable if only text-based retrieval is required for simplicity. | |

Expressions | Allows the definition of custom filters using metadata to refine search results. | Enhances response accuracy by filtering retrieved content based on specified metadata criteria. | Supports targeted retrieval by limiting search results to meet custom criteria, improving relevance. | Use custom expressions as needed for specific query refinement; leaving it blank is acceptable for general searches. |
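To make a few of the settings above more concrete, the following sketch shows one plausible way Temperature, Expressions, and Response Caching could behave. It is an illustration under stated assumptions, not the platform's implementation: the function and class names are hypothetical, the metadata filter uses simple equality matching rather than any real expression syntax, and the cache matches only queries that are identical after normalizing case and whitespace.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities; temperature must be > 0.
    Lower temperatures sharpen the distribution toward the top-scoring token."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

def matches_filter(metadata, criteria):
    """Hypothetical metadata filter: every criterion must equal the chunk's value."""
    return all(metadata.get(key) == value for key, value in criteria.items())

class ResponseCache:
    """Hypothetical cache keyed on the query with case and whitespace normalized."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def _key(query):
        return " ".join(query.lower().split())

    def get_or_generate(self, query, generate):
        """Return the cached answer, calling generate(query) only on a cache miss."""
        key = self._key(query)
        if key not in self._store:
            self._store[key] = generate(query)
        return self._store[key]

# Temperature: the same scores give a sharper distribution at 0.2 than at 1.0.
logits = [2.0, 1.0, 0.5]
precise = softmax_with_temperature(logits, 0.2)
creative = softmax_with_temperature(logits, 1.0)

# Expressions: keep only chunks whose metadata meets the criteria.
chunks = [
    {"text": "Fall registration opens in March.", "meta": {"dept": "registrar"}},
    {"text": "Gym hours change in summer.", "meta": {"dept": "recreation"}},
]
filtered = [c for c in chunks if matches_filter(c["meta"], {"dept": "registrar"})]

# Response caching: the second, equivalent query does not trigger generation.
calls = []
def fake_generate(query):
    calls.append(query)
    return f"answer to: {query}"

cache = ResponseCache()
first = cache.get_or_generate("What are the gym hours?", fake_generate)
second = cache.get_or_generate("  what are the GYM hours? ", fake_generate)
```

The temperature function shows why values in the lower range favor predictable wording: dividing the scores by a small number widens the gap between the best token and the rest before probabilities are computed.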

Recommended Resources

1) Original White Paper on RAG

- Title: "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks"

- Authors: Patrick Lewis, Ethan Perez, Aleksandra Piktus, et al.

- Summary: This seminal paper introduces the RAG model, explaining its architecture and advantages over traditional models. It also details use cases and empirical results that illustrate the model's capabilities.

- Link: Access via arXiv

2) White Paper on Dense Retrieval and Its Role in RAG

- Title: "Dense Passage Retrieval for Open-Domain Question Answering"

- Authors: Vladimir Karpukhin, Barlas Oguz, et al.

- Summary: This paper discusses dense retrieval methods and their applications in enhancing retrieval-based models like RAG.

- Link: Access via arXiv

3) Article: Introduction to Retrieval-Augmented Generation

- Source: Hugging Face Blog

- Summary: Provides a beginner-friendly overview of RAG, covering its key concepts and practical applications. The article also includes sample code snippets to help readers get started with building RAG models.

- Link: Visit Hugging Face Blog

4) Free Course Module: Generative Models and Their Applications

- Platform: DeepLearning.AI (offered through Coursera)

- Summary: While not specific to RAG, this course covers the foundational knowledge needed to understand generative models and their integration with retrieval mechanisms.

- Link: Access the Course

5) LinkedIn Learning: Introduction to NLP with Chatbots

- Instructor: Jonathan Fernandes

- Summary: This course provides practical insights into building and improving chatbot models, including methods for integrating retrieval-based techniques like RAG to enhance response quality.

- Link: Visit LinkedIn Learning

6) Article: Leveraging Retrieval-Augmented Generation for Enhanced Chatbots

- Source: Towards Data Science

- Summary: A technical breakdown of how RAG works and best practices for implementing it in chatbot frameworks. This article offers step-by-step guidance and use cases relevant to the industry.

- Link: Visit Towards Data Science

7) OpenAI Community Resource: Implementing RAG in Real-World Projects

- Platform: GitHub OpenAI Discussions

- Summary: A community-driven resource that includes discussions, example implementations, and collaborative learning for applying RAG models in real-world projects.

- Link: Explore GitHub Discussions

8) Comprehensive Guide: RAG Research Updates and Resources

- Source: GitHub - Awesome Generative AI Guide

- Summary: This curated guide offers a comprehensive list of research updates and resources related to RAG, including papers, tools, and tutorials. It is an excellent resource for those looking to stay current with the latest developments in RAG research and applications.

- Link: Explore the RAG Research Guide

9) LinkedIn Learning Course: Prompt Engineering – How to Talk to the AIs

- Instructor: Ian Barkin

- Summary: This course covers prompt engineering techniques that are crucial for improving the performance of generative systems such as RAG-based chatbots. Understanding how to design effective prompts can significantly improve the chatbot's ability to retrieve and generate accurate, context-rich responses.
